This video shows a cheap RC car hacked to be controlled by a Raspberry PI. It is completely autonomous: it uses a webcam to turn and point toward the light. The image processing is done with the OpenCV libraries.

Author: Daniele Nicassio

Building a Raspberry PI Car Radio

Today I’m going to write about my new project, a Raspberry PI powered car radio. For now it provides only basic functionality, but it can easily be improved by upgrading the software, which I haven’t focused on much so far.

In this post I will explain how I configured the PI to accomplish the task.

What I used in this project

- a Raspberry PI

- a standard 16×2 character LCD display (HD44780 compatible)

- USB speakers

- a button

- a wheel from an old mouse

- a box

The idea

The idea is to make a car radio capable of playing music from a USB stick plugged into the PI. My radio can only shuffle the songs it finds, but that is purely a software matter: it can be improved in many ways, and that is where a lot of customization can take place.

Getting the display working with the PI

The first thing to do is to understand how to drive the LCD display from the PI. This is done by connecting the display to the PI’s GPIO and then sending commands from your programming language, usually through a library for the HD44780 standard.

I did this from Python, but I won’t cover this part in this post because I followed a nice tutorial about it from Adafruit.

Once I was able to write to the display, I wrote a little Python script that prints its first command-line argument to the display, which is useful later for writing to the LCD from a shell script:

    #!/usr/bin/python
    from Adafruit_CharLCD import Adafruit_CharLCD
    import sys

    lcd = Adafruit_CharLCD()
    lcd.begin(16,1)
    lcd.clear()
    lcd.message(sys.argv[1])

Playing music through USB speakers

To play music through a USB device, the PI must be configured properly. In my case, I had a few things to do before everything worked, and my speakers still show some problems when playing from the PI (I hear a little noise, but I haven’t searched enough to say it can’t be resolved).

Anyway, if you want to use your PI as a car radio, you probably want the USB speakers to be its default audio device. You can do this by editing /etc/modprobe.d/alsa-base.conf and adding the following line, or modifying the corresponding line if it already exists with another value (which should be the case on Raspbian):

options snd-usb-audio index=0

I also had another problem, which seems to be quite common: I had to add another line at the end of that file to avoid an “expected delay” error which I discovered by running dmesg:

options snd-usb-audio nrpacks=3

I chose the highest value that didn’t produce errors in the log. After modifying the file I restarted ALSA with

sudo service alsa-utils restart

This wasn’t enough to play music through the speakers: I also had to specify the right device when playing music with mplayer. I found the name of the device by running the following command:

aplay -L

which lists the recognized devices. From that list I selected the right one, and finally got mplayer to play some MP3s:

mplayer -ao alsa:device=front my_music_file.mp3
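If you would rather pick the device from a script than by eye, the output of aplay -L is easy to parse: device names start in the first column and their descriptions are indented. The following is only a sketch written for Python 3; the function names and the sample text are made up for illustration, and the real list depends on your hardware.

```python
import subprocess


def parse_device_names(aplay_output):
    """Return the device names found in the text printed by `aplay -L`.

    Device names start in the first column; description lines are indented.
    """
    return [
        line.strip()
        for line in aplay_output.splitlines()
        if line and not line[0].isspace()
    ]


def list_playback_devices():
    """Run `aplay -L` and return the device names it reports."""
    output = subprocess.check_output(["aplay", "-L"])
    return parse_device_names(output.decode("utf-8", "replace"))


# Illustrative sample only, not copied from a real system.
SAMPLE = """null
    Discard all samples (playback) or generate zero samples (capture)
front:CARD=Speakers,DEV=0
    USB Speakers, USB Audio
    Front speakers
"""

if __name__ == "__main__":
    for name in parse_device_names(SAMPLE):
        print(name)
```

On the PI you would call list_playback_devices() instead of parsing the sample, and pass the chosen name to mplayer through -ao alsa:device=...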

USB automount and autoplay

Now that the display is working correctly and we can play music through the speakers, let’s figure out how to handle mounting and unmounting.

The next step is to configure the PI to automatically mount any USB drive that is plugged in. This can be done with the usbmount package, available in the Raspbian repository:

sudo apt-get install usbmount

Now, I want music to start playing automatically when the USB drive is plugged in, so I added a custom script to the default usbmount script folder /etc/usbmount/mount.d:

    #!/bin/bash
    python /home/pi/lcd/char/print.py "Madre foca:
    Playing music.."
    sudo -u pi mplayer -slave -input file=/home/pi/fifofile -ao alsa:device=front -shuffle $UM_MOUNTPOINT/* $UM_MOUNTPOINT/*/* $UM_MOUNTPOINT/*/*/*

The first command uses the Python script I created before to print something to the LCD when the music starts, while the last command starts the playback.

As you can guess, the $UM_MOUNTPOINT variable contains the path to the mounted drive.
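The three glob patterns in the script only reach files up to three folders deep. As a sketch of an alternative (not what my radio does), a few lines of Python can walk the whole drive and write a playlist file. The function names and the list of extensions are assumptions of mine; the code is written for Python 3.

```python
import os

# Assumed set of extensions; adjust it to the formats your player supports.
AUDIO_EXTENSIONS = (".mp3", ".ogg", ".flac", ".wav")


def find_audio_files(mount_point):
    """Return every audio file below mount_point, at any depth, sorted."""
    found = []
    for folder, _subfolders, files in os.walk(mount_point):
        for name in files:
            if name.lower().endswith(AUDIO_EXTENSIONS):
                found.append(os.path.join(folder, name))
    return sorted(found)


def write_playlist(mount_point, playlist_path):
    """Write one track path per line and return the number of tracks."""
    tracks = find_audio_files(mount_point)
    with open(playlist_path, "w") as playlist:
        for track in tracks:
            playlist.write(track + "\n")
    return len(tracks)
```

The resulting file could then be handed to mplayer with its -playlist option together with -shuffle, instead of the glob patterns.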

The -slave option is a very useful way to send commands to mplayer even when it is running in the background. Together with -input file=..., it tells mplayer to read its commands from a file; if we provide a FIFO (a named pipe) instead of a regular file, mplayer reads a command only when something is written to it. This allows mplayer to be controlled by writing to a file from Python, the shell, or whatever you prefer. To create a FIFO, use

mkfifo /home/pi/fifofile

If you want to know more on the -slave option of mplayer, see here.
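As a small sketch of what “controlling mplayer by writing to a file” looks like from Python: each command is one line of text ending with a newline. The helper names below are hypothetical, and the code is written for Python 3; seek, pause, pt_step and volume are commands from mplayer’s slave-mode command list.

```python
def format_command(name, *args):
    """Build one slave-mode command line: the name, its arguments, a newline."""
    parts = [name] + [str(arg) for arg in args]
    return " ".join(parts) + "\n"


def send_command(fifo_path, name, *args):
    """Write a single command to the FIFO that mplayer is reading.

    Opening a FIFO for writing blocks until a reader (mplayer) has it open.
    """
    with open(fifo_path, "w") as fifo:
        fifo.write(format_command(name, *args))


# Examples, only meaningful while mplayer runs with
# -slave -input file=/home/pi/fifofile:
#   send_command("/home/pi/fifofile", "pause")
#   send_command("/home/pi/fifofile", "pt_step", 1)   # next playlist entry
#   send_command("/home/pi/fifofile", "seek", 100, 1) # jump to 100% of the track
```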

I had to add the sudo -u pi part because it seemed that the user running usbmount wasn’t allowed to read from the FIFO; I don’t know exactly why.

Finally, I also created a script for the umount event, which simply kills mplayer, and placed it in the /etc/usbmount/umount.d directory:

    #!/bin/bash
    python /home/pi/lcd/char/print.py "Music stopped.
    I'm a super PI."
    killall mplayer

Connecting the inputs: the button and the wheel

I’m not very good with electronics, so i won’t explain this part very deeply, also because that’s pretty basic.

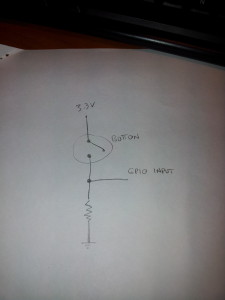

The scheme to read the state of a button should be something like this:

The mouse wheel should have three connectors, and it behaves like two buttons together, with the central pin in common. The only thing to know is that the two “buttons” are switched on and off while you turn the wheel, but one of them is triggered slightly before the other, which tells you the direction of rotation.
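This “one contact switches slightly before the other” behaviour is usually called quadrature encoding. The sketch below shows the textbook way to decode it with a table of valid transitions; it is not the simpler check my own script uses further down, and which direction counts as positive is an assumption that depends on how the wheel is wired. It is written for Python 3 and needs no hardware to try out.

```python
# The two contacts form a 2-bit state (a is the high bit, b the low bit).
# Turning one way walks through 00 -> 01 -> 11 -> 10 -> 00; turning the
# other way walks through the same states in reverse.
_FORWARD = {(0b00, 0b01), (0b01, 0b11), (0b11, 0b10), (0b10, 0b00)}
_BACKWARD = {(current, previous) for (previous, current) in _FORWARD}


def step(prev_a, prev_b, a, b):
    """Return +1, -1 or 0 for one transition between two samples."""
    previous = (int(prev_a) << 1) | int(prev_b)
    current = (int(a) << 1) | int(b)
    if (previous, current) in _FORWARD:
        return 1
    if (previous, current) in _BACKWARD:
        return -1
    return 0  # no change, or an invalid jump (bounce or missed sample)


def count_steps(samples):
    """Sum the steps over a list of (a, b) samples."""
    position = 0
    previous = samples[0]
    for current in samples[1:]:
        position += step(previous[0], previous[1], current[0], current[1])
        previous = current
    return position
```

Feeding it the values read from the two GPIO pins on every poll gives a signed count that can be mapped to “volume up” and “volume down”.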

I decided to use a wheel (or two buttons) and an additional button to provide basic input for a menu-driven “OS” for the radio; this is the minimum input needed for reasonable usability.

Reacting to the inputs

The last thing to do is to react to the user’s input, writing the real “operating system” for our radio. I wrote this in Python, and the interface is really minimal: the wheel controls the volume and the button skips to the next song.

As usual the code is badly written, and it should be used only to understand how it works. I encourage you to write a real interface; you could do almost anything with it.

The script (badly written, of course) is this:

    import RPi.GPIO as GPIO
    import time
    import os
    from Adafruit_CharLCD import Adafruit_CharLCD

    lcd = Adafruit_CharLCD()
    lcd.begin(16,1)
    lcd.clear()
    lcd.message("Hello!\nI'm the SuperPI.")

    def left():
        lcd.clear()
        lcd.message("Volume up")
        os.system("amixer set PCM 5+")
        print("left")

    def right():
        lcd.clear()
        lcd.message("Volume down")
        os.system("amixer set PCM 5-")
        print("right")

    def pressed():
        print("pressed")
        lcd.clear()
        lcd.message("Skipping to\nnext song")
        # seeking to 100% of the current song makes mplayer move on to the next one
        f = open('/home/pi/fifofile','w')
        f.write('seek 100 1\n')
        f.close()

    GPIO.setmode(GPIO.BCM)
    GPIO.setup(4, GPIO.IN)   # wheel, first contact
    GPIO.setup(18,GPIO.IN)   # wheel, second contact
    GPIO.setup(2,GPIO.IN)    # push button

    last_a = False
    last_b = False
    last_c = True
    paired = True
    i=0

    while True:
        a = GPIO.input(4)
        b = GPIO.input(18)
        c = GPIO.input(2)
        #print "4: "+str(a)+ " 18: " + str(b)

        # the button reads False when pressed: react only on the change
        if c == False and c != last_c:
            pressed()

        # the two wheel contacts differ only in the middle of a step;
        # the one that changed first gives the direction
        if a != b and paired:
            if a != last_a:
                left()
            else:
                right()
            paired = False
        else:
            paired = True

        i+=1
        last_a = a
        last_b = b
        last_c = c
        time.sleep(0.01)

Note that it uses the previously created FIFO to send mplayer the command to skip to the next song.

Assembling

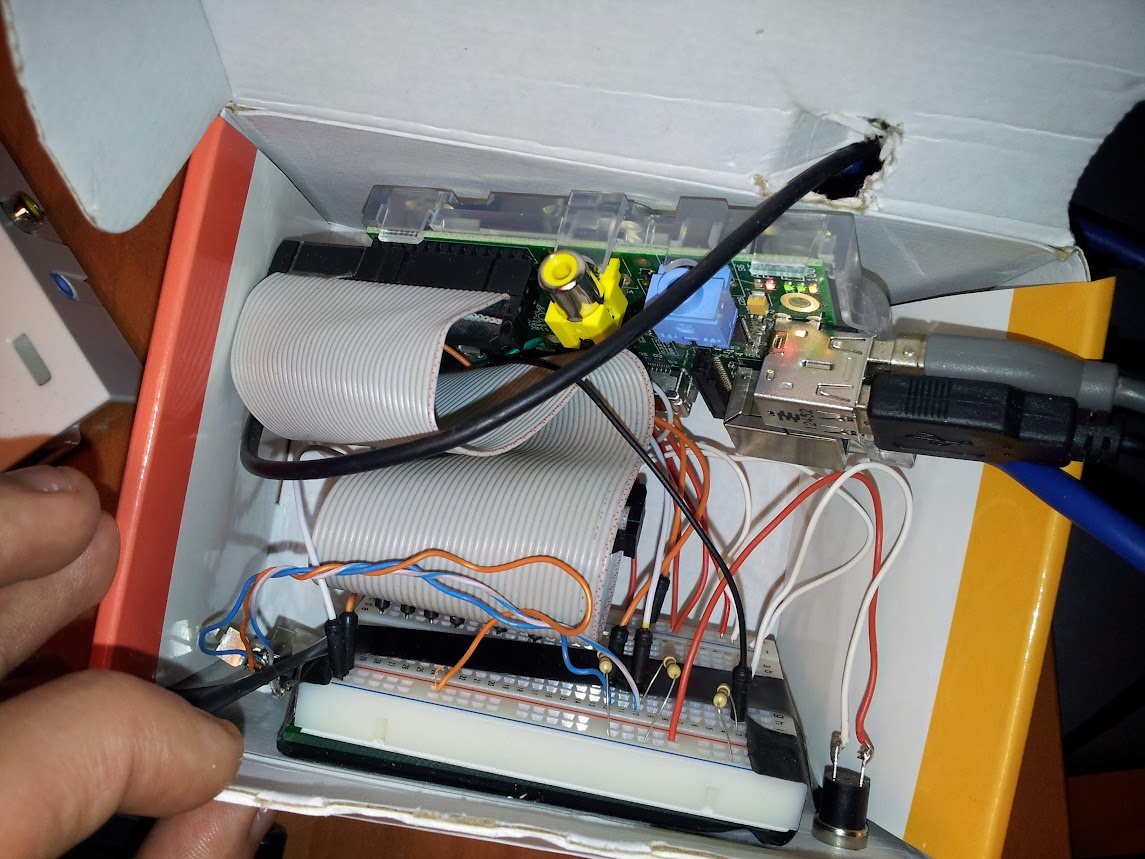

I’m sure that anyone can assemble this project better than I did. And to prove it i’ll show a picture:

The video

Here’s a little video (in italian) in which I show the (few) functionalities of the radio:

I’m thinking, when I have some spare time, to extend its functions by attaching a webcam and trying to do something more complex, given the wide range of possibilities the PI can offer.

Raspberry PI as FM AUX input for your car radio

When I read about turning your Raspberry PI into an FM transmitter I was really excited. That’s real hacking!

I decided to try to use this hack to provide an aux input for a car radio that doesn’t have one, and I succeeded (quite well).

I downloaded the FM transmitter C program from the link above and figured out a way to feed my USB audio card’s mic input to the program, so that I could broadcast it in FM: wonderful.

The result was a short-range Raspberry PI powered FM transmitter, and I was able to tune my radio to the right frequency (in this case 100.00 MHz) and listen to the music I was playing from my device.

Since there has been some interest in how I made this, I am posting here the little scripts I wrote for this project, even if they are not written very well and may not be easy to understand and adapt to your needs. Here they are:

https://drive.google.com/folderview?id=0B8r7_UiGnBnUUW9zMXVpMjB1akU&usp=sharing

Raspberry PI powered Face Follower Webcam

Hi everybody, here’s my new Raspberry PI project: the face follower webcam!

When I received my first Raspberry, I understood it would be a lot of fun to play with real-world things, so I tried to make the PI react to its environment, and I played a lot with speech recognition, various kinds of sensors and so on. But then I suddenly realized that the really fun thing would be to make it see. I also understood that processing webcam input in real time would be a tough task for a device with such limited resources.

Now I have discovered that this is indeed a tough task, but the Raspberry can manage it, as long as we keep things simple.

OpenCV Libraries

As soon as I started playing with webcams, I decided to look for existing libraries to parse the webcam input and extract some information from it. I soon came across the OpenCV libraries, beautiful libraries for processing images from the webcam, with a lot of features (which I haven’t fully explored yet) such as face detection.

I started trying to find out whether it was possible to make them work on the PI, and yes, someone had already done that, with Python too, and it was as easy as a

sudo apt-get install python-opencv

After some attempts, I found that the PI was slow at processing the frames to detect faces, but only because it buffered the frames, and I soon had a workaround for that. And soon I was done: face detection on the Raspberry PI!

All you need to try face detection on your own is the python-opencv package and the cascade XML file haarcascade_frontalface_alt.xml, which I guess you can easily find on the Internet.

I started with a script found here and then I modified it for my purposes.

The Project

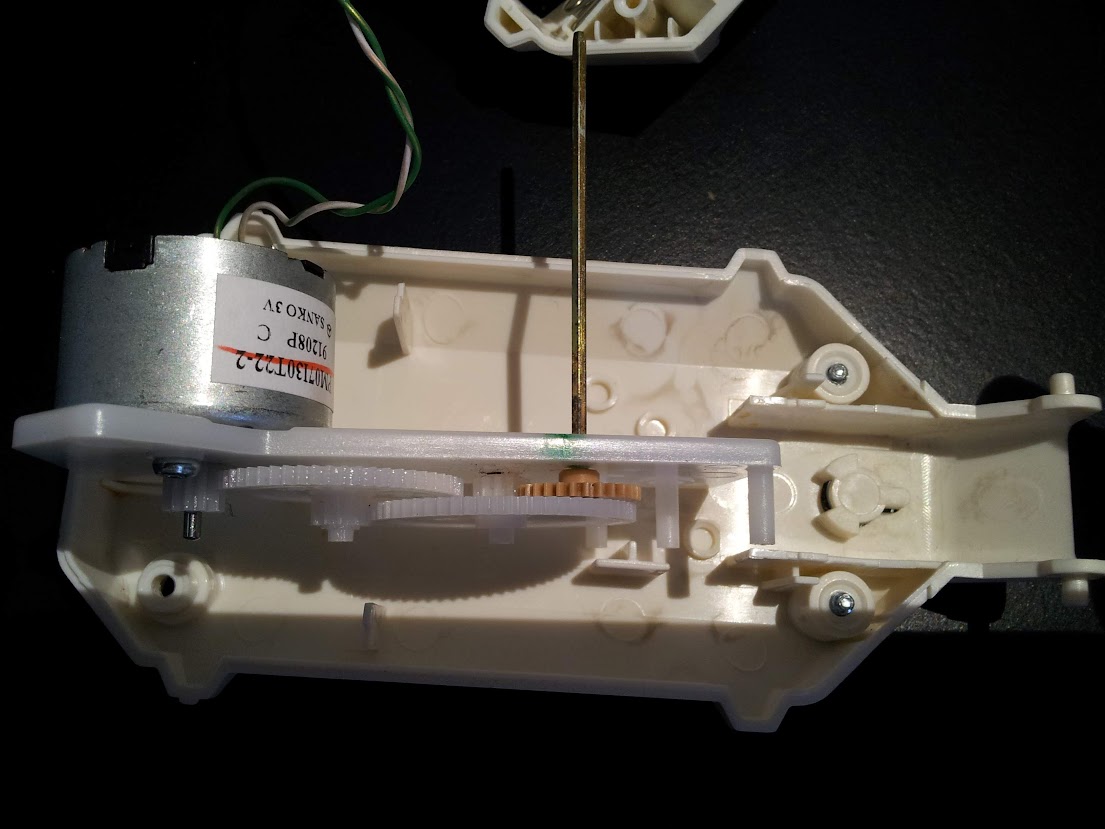

Eventually, I decided to build a motor-driven webcam with my PI that could “follow” a face detected by OpenCV in the webcam stream. I disassembled a lot of things before finding a suitable motor, but then I managed to connect it to the Raspberry’s GPIO (I had to play a little with electronics here, because I didn’t have a servo motor; if you need some information about this part, I’ll be happy to provide it, but I’m not very good with electronics, so the only thing I can say is that it works). Here’s a picture of the circuit:

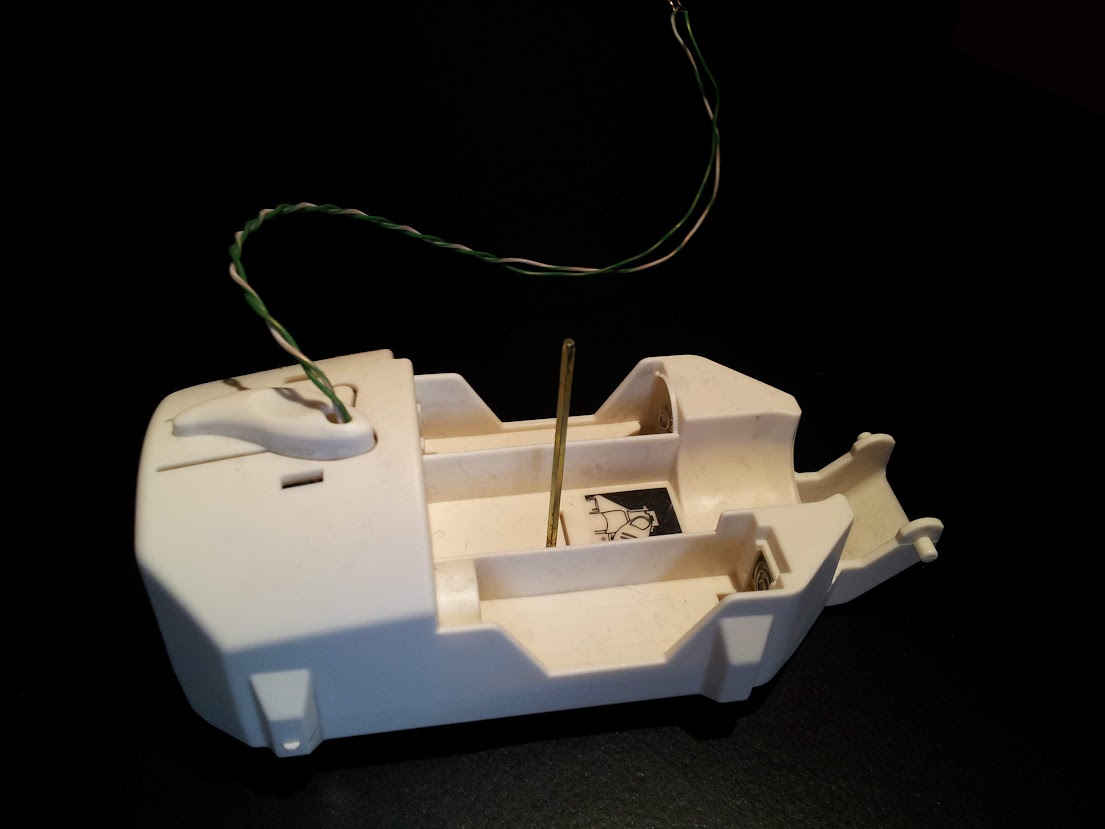

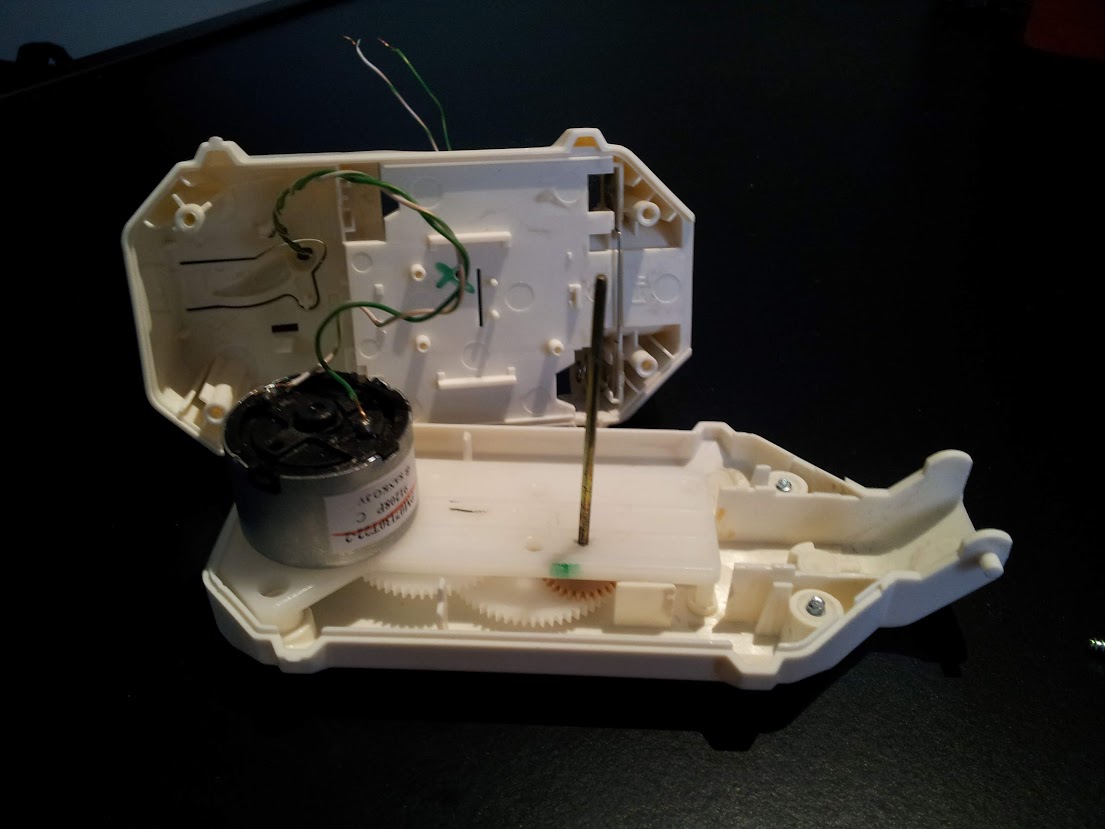

And here are some photos of the motor, on which I mounted an additional gear wheel.

Once the motor worked, I attached it to a webcam which I plugged into the PI, and then I combined the previously linked script with some GPIO scripting to achieve the goal. Here is the result:

    import RPi.GPIO as GPIO
    import time,sys
    import cv,os
    from datetime import datetime

    #GPIO pins i used
    OUT0 = 11
    OUT1 = 13

    out0 = False #!enable line: when it's false, the motor turns, when it's true, it stops
    out1 = False #!the direction the motor turns (clockwise or counter clockwise, it depends on the circuit you made)

    def DetectFace(image, faceCascade):
        min_size = (20,20)
        image_scale = 2
        haar_scale = 1.1
        min_neighbors = 3
        haar_flags = 0

        # Allocate the temporary images
        grayscale = cv.CreateImage((image.width, image.height), 8, 1)
        smallImage = cv.CreateImage(
            (
                cv.Round(image.width / image_scale),
                cv.Round(image.height / image_scale)
            ), 8 ,1)

        # Convert color input image to grayscale
        cv.CvtColor(image, grayscale, cv.CV_BGR2GRAY)

        # Scale input image for faster processing
        cv.Resize(grayscale, smallImage, cv.CV_INTER_LINEAR)

        # Equalize the histogram
        cv.EqualizeHist(smallImage, smallImage)

        # Detect the faces
        faces = cv.HaarDetectObjects(
            smallImage, faceCascade, cv.CreateMemStorage(0),
            haar_scale, min_neighbors, haar_flags, min_size
        )

        # If faces are found
        if faces:
            #os.system("espeak -v it salve")
            for ((x, y, w, h), n) in faces:
                # return the center of the first face, as fractions (0..1)
                # of the full image width and height
                return (image_scale*(x+w/2.0)/image.width, image_scale*(y+h/2.0)/image.height)

                # never reached because of the return above:
                # the input to cv.HaarDetectObjects was resized, so scale the
                # bounding box of each face and convert it to two CvPoints
                pt1 = (int(x * image_scale), int(y * image_scale))
                pt2 = (int((x + w) * image_scale), int((y + h) * image_scale))
                cv.Rectangle(image, pt1, pt2, cv.RGB(255, 0, 0), 5, 8, 0)

        return False

    def now():
        return str(datetime.now())

    def init():
        GPIO.setmode(GPIO.BOARD)
        GPIO.setup(OUT0, GPIO.OUT)
        GPIO.setup(OUT1, GPIO.OUT)
        stop()

    def stop(): #stops the motor and return when it's stopped
        global out0
        if out0 == False: #sleep only if it was moving
            out0 = True
            GPIO.output(OUT0,out0)
            time.sleep(1)
        else:
            out0 = True
            GPIO.output(OUT0,out0)

    def go(side, t): #turns the motor towards side, for t seconds
        print("Turning: side: " +str(side) + " time: " +str(t))
        global out0
        global out1
        out1 = side
        GPIO.output(OUT1,out1)
        out0 = False
        GPIO.output(OUT0,out0)
        time.sleep(t)
        stop()

    #getting camera number arg
    cam = 0
    if len(sys.argv) == 2:
        cam = sys.argv[1]

    #init motor
    init()

    capture = cv.CaptureFromCAM(int(cam))

    #i had to take the resolution down to 480x320 because the pi gave me errors with the default (higher) resolution of the webcam
    cv.SetCaptureProperty(capture, cv.CV_CAP_PROP_FRAME_WIDTH, 480)
    cv.SetCaptureProperty(capture, cv.CV_CAP_PROP_FRAME_HEIGHT, 320)

    #capture = cv.CaptureFromFile("test.avi")

    #faceCascade = cv.Load("haarcascades/haarcascade_frontalface_default.xml")
    #faceCascade = cv.Load("haarcascades/haarcascade_frontalface_alt2.xml")
    faceCascade = cv.Load("/usr/share/opencv/haarcascades/haarcascade_frontalface_alt.xml")
    #faceCascade = cv.Load("haarcascades/haarcascade_frontalface_alt_tree.xml")

    while (cv.WaitKey(500)==-1):
        print(now() + ": Capturing image..")
        for i in range(5):
            cv.QueryFrame(capture) #this is the workaround to avoid frame buffering

        img = cv.QueryFrame(capture)
        hasFaces = DetectFace(img, faceCascade)

        if hasFaces == False:
            print(now() + ": Face not detected.")
        else:
            print(now() + ": " + str(hasFaces))
            # turn for up to 0.3 seconds, proportionally to how far the
            # face is from the center of the frame
            val = abs(0.5-hasFaces[0])/0.5 * 0.3
            #print "moving for " + str(val) + " secs"
            go(hasFaces[0] < 0.5, val)

        #cv.ShowImage("face detection test", image)

Of course I had to play with the timing to make the webcam turn well, and the timings, like everything closely tied to the motor, depend on your specific motor and/or circuit.
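To make the timing rule easier to tune, here it is isolated from OpenCV and the GPIO as a small sketch (Python 3; the function names are mine). The face position is a fraction of the frame width, 0.5 means the face is centered, and the motor runs for at most 0.3 seconds, the value used in the script; both numbers are things you will probably need to adjust for your motor.

```python
MAX_TURN_SECONDS = 0.3  # value used in the script; tune it for your motor


def face_center_x(x, w, image_scale, image_width):
    """Horizontal center of a detected face as a fraction of the frame width.

    x and w are measured on the downscaled image, hence the image_scale factor.
    """
    return image_scale * (x + w / 2.0) / image_width


def turn_command(center_x, max_seconds=MAX_TURN_SECONDS):
    """Return (side, seconds): which way to turn and for how long.

    The further the face is from the center (0.5), the longer the motor runs.
    """
    seconds = abs(0.5 - center_x) / 0.5 * max_seconds
    side = center_x < 0.5
    return side, seconds
```

A face exactly in the middle gives zero seconds, and a face at either edge of the frame gives the full 0.3 seconds.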

Here’s the video (in Italian):

Raspberry PI controlled webcam turning system

I’m writing to present my new Raspberry PI powered project, a simple motor controlled directly from the Raspberry’s GPIO. I’m very excited at the thought of the Raspberry actually moving things.

My initial idea was to build a webcam that could rotate to follow a face or an object, but I believe the Raspberry PI’s CPU is too limited for this purpose. Anyway, I decided to try to make a webcam turning system, which is still pretty cool.

To do that I disassembled an old CD player, and used the motor system that moves the laser and some other recovered pieces to make it turn the webcam.

The following video (in Italian) shows how it worked (not really well but hey, it does turn the webcam!).

The interesting thing about this project is that this kind of motor (from an old CD player) is capable of turning a webcam (whose weight I had reduced as much as I could) while being powered only by the GPIO port. Not the 3.3V or 5V pins, which can supply considerably more current, but the GPIO pins themselves.

At this stage it is of course completely unusable, but like almost every project I’ve made so far, it is only meant to be a proof of concept.

Raspberry PI powered Door Alarm

This is my latest Raspberry PI project. It’s a simple alarm driven by a home-made door sensor, which plays an alarm sound and sends me a message via Twitter.

The video is in Italian.

Here’s the Python code, which uses the tweepy library:

    #!/usr/bin/python

    # Import modules for CGI handling
    import cgi, cgitb, time
    import RPi.GPIO as GPIO
    import os
    import tweepy
    import traceback

    #fill these variables with your twitter app data
    oauth_token = "**"
    oauth_token_secret = "**"
    consumer_key = "**"
    consumer_secret = "**"

    auth = tweepy.OAuthHandler(consumer_key, consumer_secret)
    auth.set_access_token(oauth_token, oauth_token_secret)
    api = tweepy.API(auth)

    def send_tweet( api, what ):
        try:
            print("Tweeting '"+what+"'..")
            # the timestamp makes every tweet different from the previous ones
            now = int(time.time())
            api.update_status('@danielenick89 '+ str(now) +': '+ what)
            print("Tweet id "+str(now)+ " sent")
        except Exception:
            print("Error while sending tweet")
            traceback.print_exc()

    GPIO.setmode(GPIO.BOARD)
    GPIO.setup(11, GPIO.IN)
    state = GPIO.input(11)

    #print "Content-type: text/html\n\n";

    # wait until the sensor input goes high (alarm armed)...
    while not GPIO.input(11):
        time.sleep(0.3)

    #print "Activated.."

    # ...then wait until it goes low again (door opened)
    while GPIO.input(11):
        time.sleep(0.3)

    send_tweet(api, "RasPI: Unauthorized door opening detected.")
    os.system("mplayer alarm2/alarm.mp3")

In order to use this code you have to create an application on Twitter, and then fill in the variables (oauth_token, oauth_token_secret, consumer_key, consumer_secret) with the corresponding values, which you can find on the site after creating the app.
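The two polling loops of the alarm can be tried without a Raspberry by passing the sensor reading in as a function. This is only a sketch for Python 3: the function name and its parameters are mine, and which level means “door closed” depends on how your sensor is wired.

```python
import time


def wait_for_opening(read_sensor, poll_seconds=0.3, sleep=time.sleep):
    """Block until the sensor goes high (armed) and then low again (opened).

    read_sensor is any function returning the current pin level; on the PI
    it could be `lambda: GPIO.input(11)`.
    """
    while not read_sensor():   # not armed yet
        sleep(poll_seconds)
    while read_sensor():       # armed, door still closed
        sleep(poll_seconds)


if __name__ == "__main__":
    # Simulated readings: low, low, high, high, low.
    readings = iter([False, False, True, True, False])
    wait_for_opening(lambda: next(readings), sleep=lambda seconds: None)
    print("Door opening detected")
```

Once wait_for_opening returns, the tweet and the alarm sound can be triggered exactly as in the script.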

Embedding Google+ Videos in your Web Page

UPDATE: This method works but the embedded video expires after about a month, and the code must be updated. It might be possible to try to modify the embedded code to make it expire later, but I didn’t test it.

With the introduction of Google+ instant upload, many people started automatically backing up their photos and videos in Google Plus (and many started actively using G+ for this reason).

This is of course great, but there’s also a limitation: you can share the uploaded photos and videos only on Google Plus. I think it works this way to increase the popularity of the social network, but that’s not very convenient, especially when you have ~300 MB videos already uploaded and you can’t even move them to YouTube to share and embed them wherever you prefer (e.g. Facebook, Twitter, a personal website).

I searched the Internet a lot before concluding that Google probably doesn’t want you to share Google Plus videos outside its social network, so I decided to find a way “manually”.

After a little experimenting I found out how to embed Google+ videos in your personal website: you can easily extract the HTML code to embed the video directly from G+.

The steps are few and pretty easy (I assume you use the Chrome/Chromium browser because it is very convenient for inspecting the DOM, but it can certainly be done in Firefox too):

- Go to Google Plus, find the video you want to embed and simply play it.

- While the video is playing, pause it and inspect the HTML (in Chrome/Chromium you can do this by right-clicking -> Inspect Element). Be sure to right-click near the video, but not on it (you can’t, since it’s Flash content)

- Search the DOM tree for the “<embed>” tag (see the picture)

- Then right-click on it -> Copy as HTML (on Chrome/Chromium)

- Now you have the HTML code representing the video: we’re almost done! It should look like this:

    <object style="width: 1280px; height: 758px;" width="1280" height="758" classid="clsid:d27cdb6e-ae6d-11cf-96b8-444553540000" codebase="http://download.macromedia.com/pub/shockwave/cabs/flash/swflash.cab#version=6,0,40,0" bgcolor="#fff">
    <param name="src" value="//www.youtube.com/get_player?enablejsapi=1&cc_load_policy=2" />
    <param name="allowfullscreen" value="true" />
    <param name="allowscriptaccess" value="always" />
    <param name="scale" value="noScale" />
    <param name="wmode" value="opaque" />
    <param name="flashvars" value="fmt_list=22%2F1280x720%2F9%2F0%2F115%2C35%2F854x480%2F9%2F0%2F115%2C34%2F640x360%2F9%2F0%2F115%2C5%2F426x240%2F7%2F0%2F0&fmt_stream_map=22%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D22%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3D8477241CE149151477AC7B259B31E65C94DBCA32.B804A52B1EDAD148A6DFDC7B417E3A922CC0B2CF%26key%3Dlh1%2C35%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D35%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3DB6AFABA155F7C5294D346C9EF74763382E0A9A3A.7B6B5451FA3051A6A28AFB37C923501CA93FEE8C%26key%3Dlh1%2C34%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D34%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3DCCA2684867495F12648E4B0D52504D7330BBDFFB.A93A0E1B7C81630C878E1E8B8AE6EC1330008D67%26key%3Dlh1%2C5%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D5%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3D1DADD9325E60ABC22DDC8FE45B84E89AC03BCB89.223CC73A0690DFD6584BF8BEFBA379135311CD94%26key%3Dlh1&video_id=picasacid&fs=1&hl=it&autoplay=1&ps=picasaweb&playerapiid=uniquePlayerId&t=1&vq=large&auth_timeout=86400000000&ttsurl=https://plus.google.com/_/photos/timedtext/100072144891672956015/5841126284110989458?&ttspolicyfile=https://plus.google.com/_/photos/timedtext/crossdomain.xml" />
    <embed style="width: 1280px; height: 758px;" width="1280" height="758" type="application/x-shockwave-flash" src="//www.youtube.com/get_player?enablejsapi=1&cc_load_policy=2" allowfullscreen="true" allowscriptaccess="always" scale="noScale" wmode="opaque" flashvars="fmt_list=22%2F1280x720%2F9%2F0%2F115%2C35%2F854x480%2F9%2F0%2F115%2C34%2F640x360%2F9%2F0%2F115%2C5%2F426x240%2F7%2F0%2F0&fmt_stream_map=22%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D22%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3D8477241CE149151477AC7B259B31E65C94DBCA32.B804A52B1EDAD148A6DFDC7B417E3A922CC0B2CF%26key%3Dlh1%2C35%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D35%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3DB6AFABA155F7C5294D346C9EF74763382E0A9A3A.7B6B5451FA3051A6A28AFB37C923501CA93FEE8C%26key%3Dlh1%2C34%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D34%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3DCCA2684867495F12648E4B0D52504D7330BBDFFB.A93A0E1B7C81630C878E1E8B8AE6EC1330008D67%26key%3Dlh1%2C5%7Chttp%3A%2F%2Fredirector.googlevideo.com%2Fvideoplayback%3Fid%3D10af9fef8ab88134%26itag%3D5%26source%3Dpicasa%26cmo%3Dsensitive_content%3Dyes%26ip%3D0.0.0.0%26ipbits%3D0%26expire%3D1363183661%26sparams%3Did%252Citag%252Csource%252Cip%252Cipbits%252Cexpire%26signature%3D1DADD9325E60ABC22DDC8FE45B84E89AC03BCB89.223CC73A0690DFD6584BF8BEFBA379135311CD94%26key%3Dlh1&video_id=picasacid&fs=1&hl=it&autoplay=1&ps=picasaweb&playerapiid=uniquePlayerId&t=1&vq=large&auth_timeout=86400000000&ttsurl=https://plus.google.com/_/photos/timedtext/100072144891672956015/5841126284110989458?&ttspolicyfile=https://plus.google.com/_/photos/timedtext/crossdomain.xml" bgcolor="#fff" />
    </object>

Now you can, if needed, modify some of the parameters you find in the code. I recommend changing

autoplay=1

to autoplay=0, to prevent the video from starting automatically when the page loads.
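If you do this often, both the autoplay change and a check of when the copied code will stop working can be scripted. The sketch below (Python 3, function names are mine) assumes that the expire field visible in the URL-encoded flashvars is a Unix timestamp; that is my reading of the parameter name, not something Google documents.

```python
import re
from datetime import datetime, timezone
from urllib.parse import unquote


def disable_autoplay(embed_html):
    """Switch autoplay=1 to autoplay=0 everywhere in the copied HTML."""
    return embed_html.replace("autoplay=1", "autoplay=0")


def expiry_timestamps(embed_html):
    """Return the distinct `expire` values found in the stream URLs.

    The URLs inside flashvars are URL-encoded, so decode them once first.
    """
    decoded = unquote(embed_html)
    return sorted({int(value) for value in re.findall(r"expire=(\d+)", decoded)})


def expiry_dates(embed_html):
    """Same values as UTC datetimes, assuming they are Unix timestamps."""
    return [
        datetime.fromtimestamp(stamp, tz=timezone.utc)
        for stamp in expiry_timestamps(embed_html)
    ]
```

Running expiry_dates on the copied code gives an idea of when it will need to be refreshed.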

This is not an official way to share Google Plus videos, of course, so this method may not work in the future, or the videos may someday disappear. But so far, it has worked for me.

Raspberry PI controlled Light Clapper

This is one of my first Raspberry PI projects. It consists of a Raspberry connected to a microphone which detects a hand clap and then controls, via GPIO, a relay that powers the lamp.

The code used for detecting the clap (which is not perfect, because it analyzes only the volume from the microphone) is this:

[Actually this is not the same code used in the video above: it has been improved, and the delay between the two claps is shorter in this script.]

The code was written only as a proof of concept, by joining various pieces of code found on the Internet, so don’t expect it to be bug-free or well written. It is not.

You should use it to understand how it works and to write something better or adapt it to your own project.

    #!/usr/bin/python

    import sys
    import time
    import alsaaudio, audioop
    import RPi.GPIO as GPIO

    class Queue:
        """A sample implementation of a First-In-First-Out
        data structure."""

        def __init__(self):
            self.size = 35
            self.in_stack = []
            self.out_stack = []
            self.ordered = []
            self.debug = False

        def push(self, obj):
            self.in_stack.append(obj)

        def pop(self):
            if not self.out_stack:
                while self.in_stack:
                    self.out_stack.append(self.in_stack.pop())
            return self.out_stack.pop()

        def clear(self):
            self.in_stack = []
            self.out_stack = []

        def makeOrdered(self):
            # copy the queue content (oldest first) into self.ordered
            self.ordered = []
            for i in range(self.size):
                #print i
                item = self.pop()
                self.ordered.append(item)
                self.push(item)

            if self.debug:
                i = 0
                for k in self.ordered:
                    if i == 0: print("-- v1 --")
                    if i == 5: print("-- v2 --")
                    if i == 15: print("-- v3 --")
                    if i == 20: print("-- v4 --")
                    if i == 30: print("-- v5 --")
                    for h in range(int(k/3)):
                        sys.stdout.write('#')
                    print("")
                    i=i+1

        def totalAvg(self):
            tot = 0
            for el in self.in_stack:
                tot += el
            for el in self.out_stack:
                tot += el
            return float(tot) / (len(self.in_stack) + len(self.out_stack))

        def firstAvg(self):
            tot = 0
            for i in range(5):
                tot += self.ordered[i]
            return tot/5.0

        def secondAvg(self):
            tot = 0
            for i in range(5,15):
                tot += self.ordered[i]
            return tot/10.0

        def thirdAvg(self):
            tot = 0
            for i in range(15,20):
                tot += self.ordered[i]
            return tot/5.0

        def fourthAvg(self):
            tot = 0
            for i in range(20,30):
                tot += self.ordered[i]
            return tot/10.0

        def fifthAvg(self):
            tot = 0
            for i in range(30,35):
                tot += self.ordered[i]
            return tot/5.0

    def wait_for_sound():
        GPIO.setmode(GPIO.BOARD)
        GPIO.setup(11, GPIO.OUT)

        # Open the device in nonblocking capture mode. The last argument could
        # just as well have been zero for blocking mode. Then we could have
        # left out the sleep call in the bottom of the loop
        card = 'sysdefault:CARD=Microphone'
        inp = alsaaudio.PCM(alsaaudio.PCM_CAPTURE,alsaaudio.PCM_NONBLOCK, card)

        # Set attributes: Mono, 16000 Hz, 16 bit little endian samples
        inp.setchannels(1)
        inp.setrate(16000)
        inp.setformat(alsaaudio.PCM_FORMAT_S16_LE)

        # The period size controls the internal number of frames per period.
        # The significance of this parameter is documented in the ALSA api.
        # For our purposes, it is sufficient to know that reads from the device
        # will return this many frames. Each frame being 2 bytes long.
        # This means that the reads below will return either 320 bytes of data
        # or 0 bytes of data. The latter is possible because we are in nonblocking
        # mode.
        inp.setperiodsize(160)

        out = False

        # writing "1" to this file from outside stops the loop below
        fout = open("/var/www/cgi-bin/clapper/killme", "w")
        fout.write('0\n')
        fout.close()

        queue = Queue()      # the last 35 volume readings
        avgQueue = Queue()   # up to 500 readings, for the background level
        n = 0
        n2 = 0

        while True:
            with open("/var/www/cgi-bin/clapper/killme", "r") as fin:
                if fin.readline() == "1\n":
                    break

            # Read data from device
            l,data = inp.read()
            if l:
                err = False
                volume = -1
                try:
                    volume = audioop.max(data, 2)
                except Exception:
                    print("err")
                    err = True
                if err: continue

                queue.push(volume)
                avgQueue.push(volume)
                n = n + 1
                n2 = n2 + 1
                if n2 > 500:
                    avgQueue.pop()

                if n > queue.size:
                    avg = avgQueue.totalAvg()
                    print("avg last fragments: " + str(avg))

                    low_limit = avg + 10
                    high_limit = avg + 30

                    queue.pop()
                    queue.makeOrdered()
                    v1 = queue.firstAvg()
                    v2 = queue.secondAvg()
                    v3 = queue.thirdAvg()
                    v4 = queue.fourthAvg()
                    v5 = queue.fifthAvg()

                    if False:
                        print("v1: "+str(v1)+"\n")
                        print("v2: "+str(v2)+"\n")
                        print("v3: "+str(v3)+"\n")
                        print("v4: "+str(v4)+"\n")
                        print("v5: "+str(v5)+"\n")

                    #if v1 < low_limit: print str(n)+": v1 ok"
                    #if v2 > high_limit: print str(n)+": v2 ok"
                    #if v3 < low_limit: print str(n)+": v3 ok"
                    #if v4 > high_limit: print str(n)+": v4 ok"
                    #if v5 < low_limit: print str(n)+": v5 ok"

                    # two claps: quiet, loud, quiet, loud, quiet
                    if v1 < low_limit and v2 > high_limit and v3 < low_limit and v4 > high_limit and v5 < low_limit:
                        print(str(time.time())+": sgaMED")
                        out = not out
                        GPIO.output(11, out)
                        queue.clear()
                        n = 0

            time.sleep(.01)

    wait_for_sound()

The code was found on the Internet and then adapted for my purposes. It uses a standard USB microphone, and should work with most Linux-compatible USB mics (I think even mics integrated into webcams).
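The detection rule buried in the script can be isolated so that it is easier to experiment with. The sketch below (Python 3, function names are mine) takes the 35 most recent volume readings, oldest first, and the background level, and checks for the pattern quiet, loud, quiet, loud, quiet, using the same window boundaries and the same +10 / +30 margins as the script.

```python
def window_average(values, start, end):
    """Average of values[start:end]."""
    return sum(values[start:end]) / float(end - start)


def is_double_clap(volumes, background, low_margin=10, high_margin=30):
    """Return True if the 35 readings look like two claps in a row."""
    if len(volumes) != 35:
        raise ValueError("expected exactly 35 volume readings")
    low_limit = background + low_margin
    high_limit = background + high_margin
    return (
        window_average(volumes, 0, 5) < low_limit         # quiet
        and window_average(volumes, 5, 15) > high_limit   # first clap
        and window_average(volumes, 15, 20) < low_limit   # quiet
        and window_average(volumes, 20, 30) > high_limit  # second clap
        and window_average(volumes, 30, 35) < low_limit   # quiet
    )
```

Because it only looks at averages of the volume, any two loud noises with the right spacing will trigger it, which is the imperfection mentioned above.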

Infrared Webcam / Lamp project

I just modified a normal webcam to work with infrared light, following these instructions: http://www.hoagieshouse.com/IR/

Then I created an IR lamp using an incandescent light bulb (which emits light across almost the whole spectrum), filtered with some old photographic negatives, which make quite a good IR-pass filter.

The video is in Italian.

Blog

This is a test post. I figured that since even people with nothing to say keep a blog, I could do it too. I’m not so sure that’s true, though.